Neural radiance fields (NeRFs) are quickly gaining traction in deep learning and have become a hot topic of discussion. Since NeRFs were introduced in 2020, there has been an explosion of follow-up papers, as demonstrated by the submissions for CVPR 2022. Time magazine, meanwhile, has included a variation of NeRFs, called instant neural graphics primitives, in its list of the best inventions of 2022. But what exactly are NeRFs and what are their applications?

In this article, I will attempt to demystify terms such as neural fields, NeRFs, neural graphics primitives, and so on. As a sneak peek, these terms refer to largely the same concept, depending on who you ask. I will also offer an analysis of how these concepts work by examining the two most influential papers.

What is a neural field?

The term “neural field” was popularized by Xie et al. and describes a neural network that parametrizes a signal, typically a single 3D scene or object. Neural fields find applications in various areas such as image synthesis and 3D reconstruction, among others. They have been applied to generative modeling, 2D image processing, robotics, medical imaging, and audio parameterization.

Neural fields encode the properties of objects or scenes using fully connected neural networks. One key aspect is that each network needs to be trained to encode a single scene. In contrast with standard machine learning, where the goal is to generalize, these networks are deliberately overfitted to one particular scene. Essentially, neural fields embed the scene into the network weights.
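To make the idea concrete, here is a minimal sketch of a neural field as a plain fully connected network in NumPy. The layer sizes, the ReLU activation, and the helper names `init_mlp` and `field` are my own illustrative assumptions and do not come from any particular paper; the network is left untrained.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Create (weight, bias) pairs for a fully connected network."""
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def field(params, coords):
    """Evaluate the field: coordinates (N, 3) -> quantities (N, 1)."""
    h = coords
    for w, b in params[:-1]:
        h = np.maximum(h @ w + b, 0.0)  # hidden layers with ReLU
    w, b = params[-1]
    return h @ w + b  # linear output layer

# The "scene" lives entirely in these weights (untrained here).
params = init_mlp([3, 64, 64, 1])

# The field can be queried at any continuous coordinate; no grid is needed.
points = rng.uniform(-1.0, 1.0, size=(5, 3))
values = field(params, points)
print(values.shape)  # (5, 1)
```

Once such a network has been fitted to a scene, storing the scene means storing `params`, and querying it at a higher resolution just means evaluating `field` at more points.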

Why use neural fields?

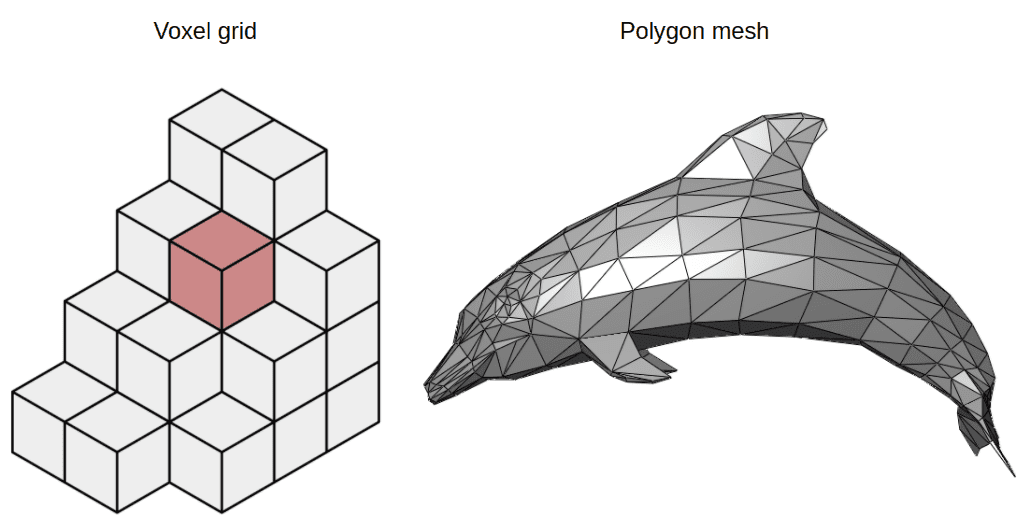

Neural fields are efficient and compact 3D representations of objects or scenes, which makes them particularly well-suited for computer graphics applications. They offer several advantages over traditional representations like voxels or meshes: they are differentiable and continuous, they can have arbitrary dimensions and resolutions, and they are domain-agnostic, which makes them compatible with a wide range of tasks.

Voxels vs Polygon meshes. Source: Wikipedia on Voxels, Wikipedia on Polygon Meshes

What do fields stand for?

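In physics, a field is a quantity defined for all spatial and/or temporal coordinates. It is represented as a mapping from a coordinate to a quantity, typically a scalar, vector, or tensor. Neural fields follow the same principle: the network plays the role of the mapping, taking a coordinate as input and returning the field quantity at that point. Because nothing in this definition is specific to graphics, the same idea can be applied across many areas of study.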

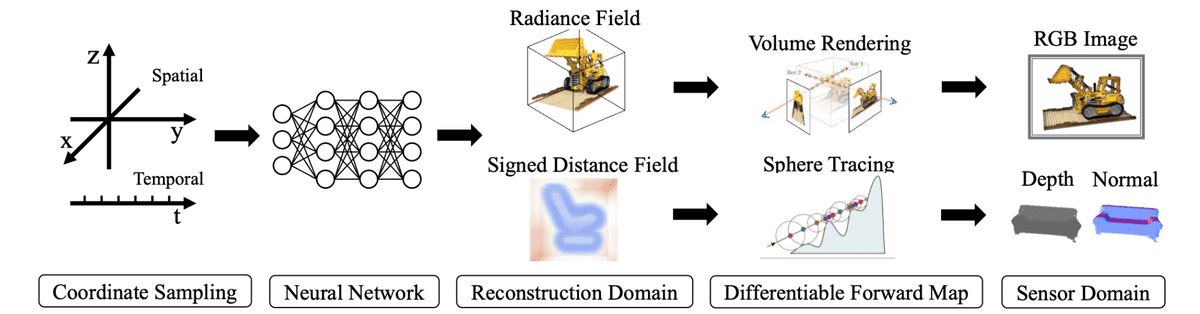

Steps to train a neural field

The typical process of computing neural fields, as outlined by Xie et al., involves sampling coordinates of a scene, feeding them to a neural network to produce field quantities, treating those quantities as samples from the desired reconstruction domain of the problem, mapping the reconstruction back to the sensor domain (e.g., 2D RGB images), and calculating the reconstruction error that is used to optimize the neural network.

A typical neural field algorithm. Source: Xie et al.
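The steps above can be sketched as a small training loop. This is only an illustration under heavy assumptions: the "scene" is a hypothetical 1D signal (`target_signal`), the mapping to the sensor domain (`forward_map`) is the identity, and the network is a one-hidden-layer tanh MLP with hand-written gradients. None of these choices come from Xie et al.; a NeRF, for instance, would use volume rendering as its forward map.

```python
import numpy as np

rng = np.random.default_rng(0)

def target_signal(x):
    """Stand-in for sensor measurements of a hypothetical 1D 'scene'."""
    return np.sin(2.0 * np.pi * x)

def forward_map(q):
    """Maps field quantities to the sensor domain (identity in this toy case)."""
    return q

hidden = 32
w1 = rng.normal(0.0, 3.0, size=(1, hidden))
b1 = rng.normal(0.0, 1.0, size=hidden)
w2 = np.zeros((hidden, 1))
b2 = np.zeros(1)

def predict(x):
    h = np.tanh(x @ w1 + b1)
    return h, h @ w2 + b2

def mse(x):
    return float(np.mean((forward_map(predict(x)[1]) - target_signal(x)) ** 2))

x_eval = np.linspace(0.0, 1.0, 200)[:, None]
loss_before = mse(x_eval)

lr, batch = 0.01, 64
for step in range(3000):
    x = rng.uniform(0.0, 1.0, size=(batch, 1))  # 1. sample coordinates
    h, q = predict(x)                           # 2. network -> field quantities
    recon = forward_map(q)                      # 3. map to the sensor domain
    err = recon - target_signal(x)              # 4. reconstruction error
    # 5. gradient step on the mean squared error (manual backpropagation)
    g_q = 2.0 * err / batch
    g_w2 = h.T @ g_q
    g_b2 = g_q.sum(axis=0)
    g_z = (g_q @ w2.T) * (1.0 - h ** 2)
    g_w1 = x.T @ g_z
    g_b1 = g_z.sum(axis=0)
    w1 -= lr * g_w1
    b1 -= lr * g_b1
    w2 -= lr * g_w2
    b2 -= lr * g_b2

loss_after = mse(x_eval)
print(f"reconstruction error: {loss_before:.4f} -> {loss_after:.4f}")
```

Note that there is no train/test split: the network is overfitted to this one signal on purpose, which is exactly the point of a neural field.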

For a more detailed explanation of NeRFs, watch this video by Yannic Kilcher.

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

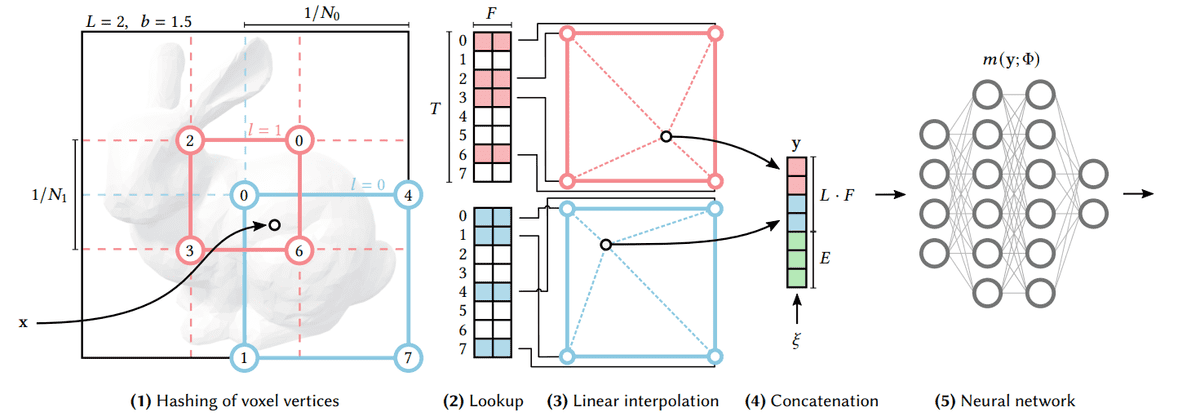

Another important paper following the NeRF trend is “Instant Neural Graphics Primitives” by Müller et al. The authors at Nvidia managed to reduce the training time dramatically, from hours to mere seconds, by employing a novel input representation called multiresolution hash encoding. This encoding allows the use of much smaller neural networks, which reduces the total number of floating-point operations required. Also noteworthy is that “neural graphics primitives” is just a different term for neural fields.

Multiresolution Hash Encoding

Multiresolution hash encoding is the main highlight of the paper. In addition to the main network parameters, it introduces trainable encoding parameters. These parameters are feature vectors, arranged into several resolution levels and stored at the vertices of a grid at each level. To encode a point, the feature vectors of the surrounding grid vertices are looked up at every level, interpolated, and concatenated before being passed to the network. This approach allows the network to learn both coarse and fine features, boosting the quality of the final results. The benefits of this encoding include an automatic level of detail and a task-agnostic encoding of 3D space with feature vectors. The entire process is fully differentiable.
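As a rough sketch of the lookup, here is a simplified 2D version in NumPy. It is not the instant-ngp implementation: the class name `HashEncoding2D` and all default sizes are my own assumptions, and as a simplification every level is hashed, whereas the paper maps coarse levels one-to-one when the grid fits in the table. The hashing constants and the geometric growth of the resolutions follow the scheme described by Müller et al.

```python
import numpy as np

PRIMES = (1, 2654435761)  # per-dimension hashing constants

class HashEncoding2D:
    """Simplified 2D multiresolution hash encoding (forward pass only)."""

    def __init__(self, n_levels=4, table_size=2**10, n_features=2,
                 n_min=4, n_max=64, seed=0):
        rng = np.random.default_rng(seed)
        # Resolutions grow geometrically from n_min to n_max.
        growth = np.exp((np.log(n_max) - np.log(n_min)) / (n_levels - 1))
        self.resolutions = [int(np.floor(n_min * growth**l)) for l in range(n_levels)]
        self.table_size = table_size
        # One table of feature vectors per level; these would be trained.
        self.tables = rng.uniform(-1e-4, 1e-4, size=(n_levels, table_size, n_features))

    def _hash(self, ix, iy):
        """Map integer vertex coordinates to a slot in the table."""
        return ((ix * PRIMES[0]) ^ (iy * PRIMES[1])) % self.table_size

    def __call__(self, xy):
        """Encode points xy of shape (N, 2) in [0, 1] -> (N, n_levels * n_features)."""
        out = []
        for table, res in zip(self.tables, self.resolutions):
            pos = xy * res
            lo = np.floor(pos).astype(np.int64)  # lower-left vertex of the cell
            frac = pos - lo                      # position inside the cell
            feats = 0.0
            for dx in (0, 1):                    # bilinear interpolation over
                for dy in (0, 1):                # the four cell corners
                    idx = self._hash(lo[:, 0] + dx, lo[:, 1] + dy)
                    wx = frac[:, 0] if dx else 1.0 - frac[:, 0]
                    wy = frac[:, 1] if dy else 1.0 - frac[:, 1]
                    feats = feats + (wx * wy)[:, None] * table[idx]
            out.append(feats)
        return np.concatenate(out, axis=1)       # coarse-to-fine features

enc = HashEncoding2D()
pts = np.array([[0.1, 0.2], [0.5, 0.5], [0.9, 0.3]])
codes = enc(pts)
print(codes.shape)  # (3, 8)
```

The output of the encoding replaces the raw coordinates as the input to a small MLP. Because the interpolation weights are differentiable, gradients can flow back into the table entries, which is how the feature vectors get trained alongside the network.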

Conclusion

NeRFs have emerged as one of the most exciting applications of neural networks in recent years. Their potential to render 3D models in a matter of seconds was previously inconceivable. It won’t be long before we see these architectures enter the gaming and simulation industries.

If you’d like to delve deeper into NeRFs, visit the instant-ngp repo by Nvidia and experiment with creating your own models. Additionally, if you’re interested in learning more about computer graphics, feel free to let us know on our Discord server. Finally, if you enjoy our blog posts, consider supporting us by purchasing our courses or books.