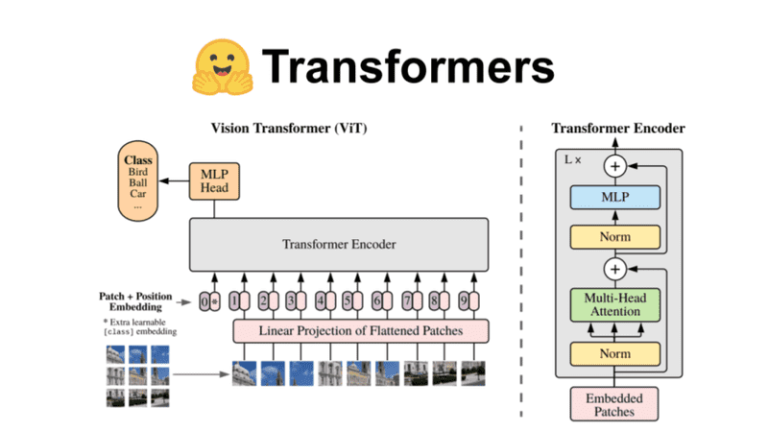

This article is a tutorial on the Hugging Face ecosystem. It covers the libraries developed by the Hugging Face team, including transformers and datasets, and shows how they can be used to build and train transformer models with minimal boilerplate code. It also presents a full pipeline for building and training a Vision Transformer (ViT). The tutorial assumes prior knowledge of the architecture and revisits only the key ViT concepts: an image is represented as a sequence of patches, and the model is trained on a labeled dataset in a fully supervised fashion.
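The idea of representing an image as a sequence of patches can be sketched in a few lines of NumPy. This is a minimal illustration, not the article's code: the helper name image_to_patches, the 224x224 input size, and the 16-pixel patch size are assumptions chosen for the example.

```python
import numpy as np


def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened square patches.

    Hypothetical helper for illustration only.
    """
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # (rows, P, cols, P, C) -> (rows, cols, P, P, C) -> (rows * cols, P * P * C)
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * channels)


# A dummy 224x224 RGB image (assumed size) split into 16x16 patches.
image = np.arange(224 * 224 * 3, dtype=np.float32).reshape(224, 224, 3)
patches = image_to_patches(image, patch_size=16)
print(patches.shape)  # (196, 768): 14 * 14 patches, each 16 * 16 * 3 values
```

Each row of the result plays the role of one token. In an actual ViT, every flattened patch is then linearly projected and combined with a position embedding before entering the transformer encoder.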

The article then examines the Hugging Face ecosystem in depth. It discusses the fundamental principles of the libraries, which together cover the entire machine learning pipeline, from data loading to training and evaluation. Key topics include Datasets, Metrics, Transformers, Pipelines, Data Collator, and Model Definition.

To load a dataset, the article introduces the use of the load_dataset function and how it can be used to obtain datasets from the Hugging Face Hub, read from a local file, or load from in-memory data. The article covers other dataset operations and features, such as sorting, shuffling, filtering, and mapping.

Metrics are also explored with a focus on how datasets.Metric objects can be loaded from the Hub or defined as custom metrics in separate scripts.

The article then turns to the transformers library and the core functionality it offers for building, training, and fine-tuning transformer models. It explains how Pipelines provide an intuitive way to run a model for inference and how they abstract away the details of a variety of tasks.

Model preparation is discussed next, including the use of pre-trained transformer models, the process of model definition, and how model outputs are structured. The tutorial also introduces training with the Hugging Face Trainer class and its key training arguments.
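To make model definition and model outputs concrete, the following sketch builds a very small, randomly initialized ViT from a configuration object instead of downloading pre-trained weights. It assumes torch and transformers are installed; the tiny hyperparameters (32x32 images, one layer, three labels) are arbitrary values picked to keep the example fast and are not the settings used in the article.

```python
import torch
from transformers import ViTConfig, ViTForImageClassification

# Deliberately tiny, assumed configuration so the model builds instantly.
config = ViTConfig(
    image_size=32,
    patch_size=8,
    num_channels=3,
    hidden_size=32,
    num_hidden_layers=1,
    num_attention_heads=2,
    intermediate_size=64,
    num_labels=3,
)
model = ViTForImageClassification(config)  # random weights, no download
model.eval()

# A batch of two random "images" in (batch, channels, height, width) layout.
pixel_values = torch.randn(2, 3, 32, 32)
with torch.no_grad():
    outputs = model(pixel_values=pixel_values)

# Outputs are returned as an object with named fields such as `logits`.
print(outputs.logits.shape)
```

Loading pre-trained weights would replace the constructor call with from_pretrained and a checkpoint name, while the forward pass and the output object stay the same.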

The tutorial wraps up with a section on evaluating the model using the Trainer object and the predict function to obtain model outputs and metrics. The article also highlights additional features provided by the transformers library, including centralized logging, debugging capabilities, and the use of auto classes to simplify the process of loading pre-trained models and tokenizers.
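Metrics during evaluation are typically produced by a small function passed to the Trainer. The sketch below shows one plausible shape for such a function, assuming it receives a (logits, labels) pair; the function name compute_metrics, the dummy arrays, and the choice of accuracy are illustrative assumptions rather than the article's exact code.

```python
import numpy as np


def compute_metrics(eval_pred):
    """Turn raw model outputs into a dictionary of named metrics.

    Assumes `eval_pred` unpacks into (logits, labels), which is the form
    a Trainer-style evaluation loop is expected to provide.
    """
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)  # most likely class per example
    return {"accuracy": float((predictions == labels).mean())}


# Dummy logits for four examples and two classes (made-up values).
logits = np.array([[2.0, 0.1], [0.3, 1.5], [0.9, 0.2], [0.1, 0.4]])
labels = np.array([0, 1, 1, 1])
metrics = compute_metrics((logits, labels))
print(metrics)  # {'accuracy': 0.75}
```

The same function can be exercised on its own like this before handing it to a training loop, which makes metric bugs much easier to spot.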

Finally, the article provides an acknowledgment section and a conclusion that reflects on the contributions of the Hugging Face team to AI research and expresses optimism for further expansion into vision-related fields.

Throughout the article, relevant code examples and references to external resources are provided to enhance understanding and reinforce the learning experience.
