
BigML offers a chat support interface where our users get their questions about the platform answered quickly. Recently, an academic user reached out to explain that he wanted to combine predictions from our local models with the explanation plots provided by the SHAP library. Fortunately, BigML models are white-box: they can be downloaded to any environment and used to produce predictions there. This was already doable with our Python bindings as they were, but his question made us realize that some users might prefer a more streamlined path for the same scenario. So we went ahead and built one, and this blog post covers it in detail.

Understanding predictions

We know that creating a predictive model can be quite simple, especially if you use BigML. We also know that only certain models are simple enough to be interpretable as they are. Decision trees are a good example: they express their patterns as if-then conditions that combine the features in your dataset. Thanks to that, we can see which features drive each prediction and quantify their impact as importance values. These feature contributions can be displayed as importance plots, like the ones available for models and predictions in the BigML Dashboard.
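To make the idea concrete, here is a minimal sketch of a hand-built regression tree stored as nested dicts, together with one common way to derive importances from it: summing, per field, the reduction in squared error achieved at the splits that use that field, then normalizing. The tree, its field names, thresholds and error values are all hypothetical, and this mean-decrease-in-impurity approach is an illustration; BigML's own importance computation may differ in detail.

```python
from collections import defaultdict

# Hypothetical regression tree. Internal nodes split on one field; "error"
# is the total squared error of the training instances reaching the node.
TREE = {
    "field": "median_income", "threshold": 3.5, "error": 100.0,
    "left": {
        "field": "house_age", "threshold": 20, "error": 30.0,
        "left": {"output": 1.2, "error": 10.0},
        "right": {"output": 1.8, "error": 12.0},
    },
    "right": {"output": 3.1, "error": 25.0},
}


def predict(node, instance, path=None):
    """Follow the if-then conditions down to a leaf, recording each rule."""
    path = [] if path is None else path
    if "output" in node:
        return node["output"], path
    field, threshold = node["field"], node["threshold"]
    if instance[field] <= threshold:
        path.append(f"{field} <= {threshold}")
        return predict(node["left"], instance, path)
    path.append(f"{field} > {threshold}")
    return predict(node["right"], instance, path)


def importances(node, totals=None):
    """Add up each field's error reduction over the splits that use it."""
    totals = defaultdict(float) if totals is None else totals
    if "output" not in node:
        left, right = node["left"], node["right"]
        totals[node["field"]] += node["error"] - left["error"] - right["error"]
        importances(left, totals)
        importances(right, totals)
    return totals


def normalized_importances(tree):
    """Scale the raw error reductions so they sum to 1."""
    totals = importances(tree)
    grand_total = sum(totals.values())
    return {field: value / grand_total for field, value in totals.items()}


prediction, rules = predict(TREE, {"median_income": 2.0, "house_age": 35})
print(prediction, rules)  # 1.8 ['median_income <= 3.5', 'house_age > 20']
print(normalized_importances(TREE))  # median_income ≈ 0.85, house_age ≈ 0.15
```

The rules list is the if-then explanation of a single prediction, while the normalized importances summarize how much each field matters across the whole tree.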

Feature Importance Plot on BigML Dashboard


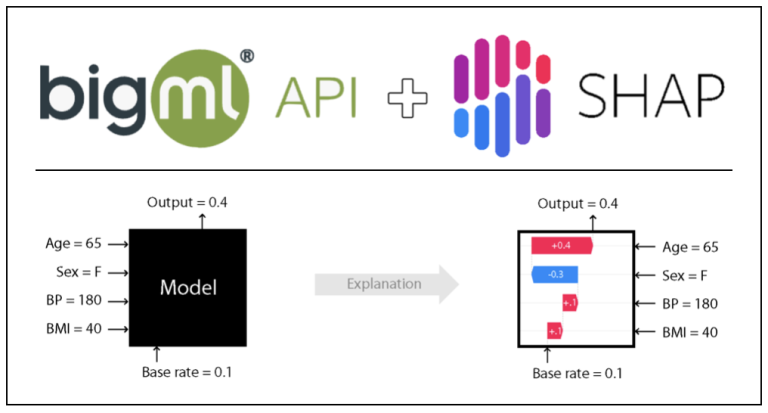

However, as complexity increases, the relationship between the input features in your dataset and the target (or objective) field can become quite obscure. Using techniques from cooperative game theory, ML researchers have found a unified way to compute feature importances along with their positive or negative contribution to the target field. Of course, I’m referring to SHAP (SHapley Additive exPlanations) values.
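For a handful of features, Shapley values can be computed exactly by enumerating every coalition of features. The sketch below does that for an arbitrary prediction function, using a single baseline instance to stand in for "absent" features. That is a simplification (SHAP explainers usually average over a background dataset), and the toy pricing model with its weights is hypothetical. The key property to check is additivity: the contributions add up to the prediction for the instance minus the prediction for the baseline.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of each feature for predict(instance).

    Features outside a coalition take their value from `baseline`.
    The cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(instance)
    values = [0.0] * n

    def value(coalition):
        # Mixed input: instance values for coalition members,
        # baseline values for everything else.
        mixed = [instance[k] if k in coalition else baseline[k] for k in range(n)]
        return predict(mixed)

    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                values[i] += weight * (value(coalition | {i}) - value(coalition))
    return values


# Hypothetical pricing model; the weights and interaction term are made up.
def toy_price(features):
    income, age, rooms = features
    return 0.4 * income - 0.01 * age + 0.05 * rooms + 0.02 * income * rooms


phi = shapley_values(toy_price, [5.0, 30.0, 6.0], [3.0, 20.0, 5.0])
print([round(v, 4) for v in phi])  # [1.02, -0.1, 0.13]
```

Each linear term contributes exactly its weight times the change from the baseline, while the income-rooms interaction is shared between those two features; the three values add up to `toy_price(instance) - toy_price(baseline)`.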

There’s plenty of information about SHAP values and their application to Machine Learning interpretability, so we’ll assume that you’re familiar with the core concept and focus instead on how to use the technique with BigML’s supervised models. The entire code for the examples in this post can be found in the following Jupyter notebook, along with step-by-step descriptions. Here, we’ll mainly highlight the integration bits so that you can quickly get the hang of it.
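The core of such an integration is an adapter. SHAP's model-agnostic explainers call the model function with a batch of rows (a 2D array) and expect one prediction per row, while a local model from the BigML Python bindings predicts from one input dictionary keyed by field name. The sketch below bridges the two with a hypothetical `RowsToDictAdapter` class; `fake_local_predict` is a stand-in for a real local model's `predict` method so the example runs on its own, and the field names are assumptions.

```python
from typing import Callable, Dict, List, Sequence


class RowsToDictAdapter:
    """Hypothetical adapter: turns batches of feature rows into the
    per-instance dictionaries that a dict-based predictor expects."""

    def __init__(self, predict_one: Callable[[Dict[str, float]], float],
                 field_names: Sequence[str]):
        self.predict_one = predict_one
        self.field_names = list(field_names)

    def predict(self, rows) -> List[float]:
        # Accepts any iterable of rows (lists, tuples or NumPy array rows)
        # and returns one prediction per row, in the same order.
        return [self.predict_one(dict(zip(self.field_names, row)))
                for row in rows]


# Stand-in for a downloaded local model's predict method (hypothetical).
def fake_local_predict(input_data: Dict[str, float]) -> float:
    return 0.4 * input_data["median_income"] - 0.01 * input_data["house_age"]


adapter = RowsToDictAdapter(fake_local_predict, ["median_income", "house_age"])
print(adapter.predict([[5.0, 30.0], [3.0, 20.0]]))
```

With a real regression model, `predict_one` would wrap the local model's prediction call (assuming it returns a single numeric value), and `adapter.predict` is the kind of callable you could hand to a model-agnostic explainer such as `shap.KernelExplainer(adapter.predict, background_rows)`.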

Regression explanations

We’ll start simple and predict prices using the California House Pricing file. It contains data about the median price of houses in California and their features, like their age, the number of bedrooms or bathrooms, etc. In the notebook, the data is loaded into a Pandas DataFrame.
