Generative artificial intelligence (AI) applications built around large language models (LLMs) have demonstrated the potential to create and accelerate economic value for businesses. Examples of applications include conversational search, customer support agent assistance, customer support analytics, self-service virtual assistants, chatbots, rich media generation, content moderation, coding companions to accelerate secure, high-performance software development, deeper insights from multimodal content sources, acceleration of your organization’s security investigations and mitigations, and much more. Many customers are looking for guidance on how to manage security, privacy, and compliance as they develop generative AI applications. Understanding and addressing LLM vulnerabilities, threats, and risks during the design and architecture phases helps teams focus on maximizing the economic and productivity benefits generative AI can bring. Being aware of risks fosters transparency and trust in generative AI applications, encourages increased observability, helps to meet compliance requirements, and facilitates informed decision-making by leaders.

The goal of this post is to empower AI and machine learning (ML) engineers, data scientists, solutions architects, security teams, and other stakeholders to have a common mental model and framework to apply security best practices, allowing AI/ML teams to move fast without trading off security for speed. Specifically, this post seeks to help AI/ML engineers and data scientists who may not have had previous exposure to security principles gain an understanding of core security and privacy best practices in the context of developing generative AI applications using LLMs. We also discuss common security concerns that can undermine trust in AI, as identified by the Open Worldwide Application Security Project (OWASP) Top 10 for LLM Applications, and show ways you can use AWS to increase your security posture and confidence while innovating with generative AI.

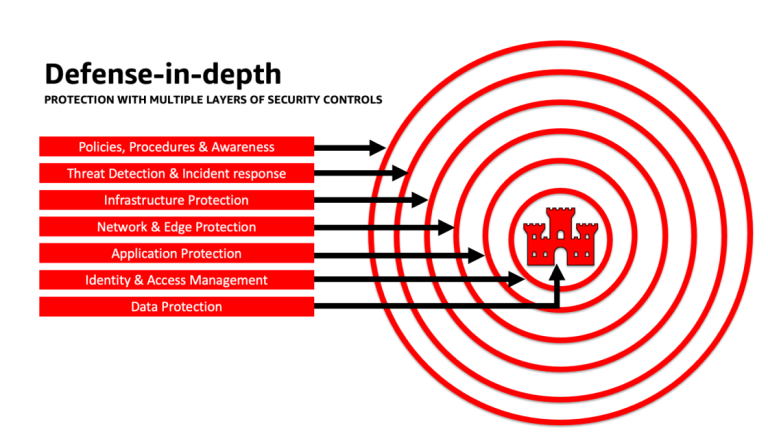

This post provides three guided steps to architect risk management strategies while developing generative AI applications using LLMs. We first delve into the vulnerabilities, threats, and risks that arise from the implementation, deployment, and use of LLM solutions, and provide guidance on how to start innovating with security in mind. We then discuss how building on a secure foundation is essential for generative AI. Lastly, we connect these together with an example LLM workload to describe an approach towards architecting with defense-in-depth security across trust boundaries.

By the end of this post, AI/ML engineers, data scientists, and security-minded technologists will be able to identify strategies to architect layered defenses for their generative AI applications, understand how to map OWASP Top 10 for LLMs security concerns to some corresponding controls, and build foundational knowledge towards answering the following top AWS customer question themes for their applications:

Improve security outcomes while developing generative AI

Innovation with generative AI using LLMs requires starting with security in mind to develop organizational resiliency, build on a secure foundation, and integrate security with a defense-in-depth approach. Security is a shared responsibility between AWS and AWS customers. All the principles of the AWS Shared Responsibility Model are applicable to generative AI solutions. Refresh your understanding of the AWS Shared Responsibility Model as it applies to infrastructure, services, and data when you build LLM solutions.

Start with security in mind to develop organizational resiliency

Starting with security in mind helps you develop the organizational resiliency needed to build generative AI applications that meet your security and compliance objectives. Organizational resiliency draws on and extends the definition of resiliency in the AWS Well-Architected Framework to include and prepare for the ability of an organization to recover from disruptions. Consider your security posture, governance, and operational excellence when assessing overall readiness to develop generative AI with LLMs and your organizational resiliency to any potential impacts. As your organization advances its use of emerging technologies such as generative AI and LLMs, overall organizational resiliency should be considered as a cornerstone of a layered defensive strategy to protect assets and lines of business from unintended consequences.

Organizational resiliency matters substantially for LLM applications

Although all risk management programs can benefit from resilience, organizational resiliency matters substantially for generative AI. Five of the OWASP-identified top 10 risks for LLM applications rely on defining architectural and operational controls and enforcing them at an organizational scale in order to manage risk. These five risks are insecure output handling, supply chain vulnerabilities, sensitive information disclosure, excessive agency, and overreliance. Begin increasing organizational resiliency by socializing your teams to consider AI, ML, and generative AI security a core business requirement and top priority throughout the whole lifecycle of the product, from inception of the idea, to research, to the application’s development, deployment, and use. In addition to awareness, your teams should take action to account for generative AI in governance, assurance, and compliance validation practices.

Build organizational resiliency around generative AI

Organizations can start building their capacity and capabilities for AI/ML and generative AI security by extending their existing security, assurance, compliance, and development programs to account for generative AI.

The following are the five key areas of interest for organizational AI, ML, and generative AI security:

Develop a threat model throughout your generative AI Lifecycle

Organizations building with generative AI should focus on risk management, not risk elimination, and include threat modeling and business continuity planning in the planning, development, and operations of generative AI workloads.

Ensure teams understand the critical nature of security as part of the design and architecture phases of your software development lifecycle on Day 1. This means discussing security at each layer of your stack and lifecycle, and positioning security and privacy as enablers to achieving business objectives.

At AWS, security is our top priority. AWS is architected to be the most secure global cloud infrastructure on which to build, migrate, and manage applications and workloads. This is backed by our deep set of over 300 cloud security tools and the trust of our millions of customers, including the most security-sensitive organizations like government, healthcare, and financial services. When building generative AI applications using LLMs on AWS, you gain security benefits from the secure, reliable, and flexible AWS Cloud computing environment.

Use an AWS global infrastructure for security, privacy, and compliance

When you develop data-intensive applications on AWS, you can benefit from an AWS global Region infrastructure, architected to provide capabilities to meet your core security and compliance requirements. This is reinforced by our AWS Digital Sovereignty Pledge, our commitment to offering you the most advanced set of sovereignty controls and features available in the cloud. We are committed to expanding our capabilities to allow you to meet your digital sovereignty needs, without compromising on the performance, innovation, security, or scale of the AWS Cloud.

Understand your security posture using AWS Well-Architected and Cloud Adoption Frameworks

AWS offers best practice guidance developed from years of experience supporting customers in architecting their cloud environments with the AWS Well-Architected Framework and in evolving to realize business value from cloud technologies with the AWS Cloud Adoption Framework (AWS CAF). Understand the security posture of your AI, ML, and generative AI workloads by performing a Well-Architected Framework review. Reviews can be performed using tools like the AWS Well-Architected Tool, or with the help of your AWS team through AWS Enterprise Support. The AWS Well-Architected Tool automatically integrates insights from AWS Trusted Advisor to evaluate what best practices are in place and what opportunities exist to improve functionality and cost-optimization. The AWS Well-Architected Tool also offers customized lenses with specific best practices such as the Machine Learning Lens for you to regularly measure your architectures against best practices and identify areas for improvement. Checkpoint your journey on the path to value realization and cloud maturity by understanding how AWS customers adopt strategies to develop organizational capabilities in the AWS Cloud Adoption Framework for Artificial Intelligence, Machine Learning, and Generative AI. You might also find benefit in understanding your overall cloud readiness by participating in an AWS Cloud Readiness Assessment. AWS offers additional opportunities for engagement—ask your AWS account team for more information on how to get started with the Generative AI Innovation Center.

Accelerate your security and AI/ML learning with best practices guidance, training, and certification

AWS also curates recommendations from Best Practices for Security, Identity, & Compliance and AWS Security Documentation to help you identify ways to secure your training, development, testing, and operational environments. If you’re just getting started and want to dive deeper on security training and certification, consider starting with AWS Security Fundamentals and the AWS Security Learning Plan. You can also use the AWS Security Maturity Model to help guide you in finding and prioritizing the best activities at different phases of maturity on AWS, starting with quick wins and moving through the foundational, efficient, and optimized stages. After you and your teams have a basic understanding of security on AWS, we strongly recommend reviewing How to approach threat modeling and then leading a threat modeling exercise with your teams starting with the Threat Modeling For Builders Workshop training program. There are many other AWS Security training and certification resources available.

Apply a defense-in-depth approach to secure LLM applications

Applying a defense-in-depth security approach to your generative AI workloads, data, and information can help create the best conditions to achieve your business objectives. Defense-in-depth security best practices mitigate many of the common risks that any workload faces, helping you and your teams accelerate your generative AI innovation. A defense-in-depth security strategy uses multiple redundant defenses to protect your AWS accounts, workloads, data, and assets. It helps make sure that if any one security control is compromised or fails, additional layers exist to help isolate threats and prevent, detect, respond, and recover from security events. You can use a combination of strategies, including AWS services and solutions, at each layer to improve the security and resiliency of your generative AI workloads.

Many AWS customers align to industry standard frameworks, such as the NIST Cybersecurity Framework. This framework helps ensure that your security defenses have protection across the pillars of Identify, Protect, Detect, Respond, Recover, and most recently added, Govern. You can then map the framework to AWS security services, and to those from integrated third parties, to help you validate that you have adequate coverage and policies for any security event your organization encounters.
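One way to make this mapping concrete is a small coverage check that lists your controls under each framework function and flags any function with nothing mapped to it. The following is a minimal sketch, not an official mapping: the function name `coverage_gaps`, the `controls_in_place` inventory, and the assignment of each service to a function are assumptions made for illustration.

```python
from typing import Dict, List

# The six NIST Cybersecurity Framework functions named in the text.
CSF_FUNCTIONS: List[str] = [
    "Govern", "Identify", "Protect", "Detect", "Respond", "Recover",
]

# Hypothetical inventory for one team. Which control belongs under which
# function is an assumption for this sketch, not an AWS-published mapping.
controls_in_place: Dict[str, List[str]] = {
    "Govern": ["AWS Organizations service control policies"],
    "Identify": ["AWS Config resource inventory"],
    "Protect": ["AWS IAM least-privilege roles", "AWS KMS encryption keys"],
    "Detect": ["Amazon GuardDuty", "AWS CloudTrail logging"],
    "Respond": [],  # nothing mapped yet, so this should be reported as a gap
    "Recover": ["AWS Backup plans"],
}


def coverage_gaps(controls: Dict[str, List[str]]) -> List[str]:
    """Return the framework functions that have no control mapped to them."""
    return [name for name in CSF_FUNCTIONS if not controls.get(name)]


if __name__ == "__main__":
    for gap in coverage_gaps(controls_in_place):
        print(f"No control mapped to CSF function: {gap}")
```

Keeping the inventory as plain data makes it easy to review alongside a threat model and to extend with third-party controls.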

Defense in depth: Secure your environment, then add enhanced AI/ML-specific security and privacy capabilities

A defense-in-depth strategy should start by protecting your accounts and organization first, and then layer on the additional built-in security and privacy enhanced features of services such as Amazon Bedrock and Amazon SageMaker. AWS has over 30 services in the Security, Identity, and Compliance portfolio which are integrated with AWS AI/ML services, and can be used together to help secure your workloads, accounts, and organization. To properly defend against the OWASP Top 10 for LLM, these should be used together with the AWS AI/ML services.

Layer defenses at trust boundaries in LLM applications

When developing generative AI-based systems and applications, you should consider the same concerns as with any other ML application, such as being mindful of software and data component origins and implementing necessary protections against LLM supply chain threats. Include insights from industry frameworks as you do so.

Analyze and mitigate risk in your LLM applications

In this section, we analyze and discuss some risk mitigation techniques based on trust boundaries and interactions, that is, distinct areas of the workload that share a similar controls scope and risk profile. In this sample architecture of a chatbot application, there are five trust boundaries where controls are demonstrated, based on how AWS customers commonly build their LLM applications. Your LLM application may have more or fewer definable trust boundaries. In the following sample architecture, these trust boundaries are defined as:

User interface interactions: Develop request and response monitoring

Detect and respond to cyber incidents related to generative AI in a timely manner by evaluating a strategy to address risk from the inputs and outputs of the generative AI application.
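As an illustration of request and response monitoring, the sketch below screens each prompt and each model response against a small set of patterns and logs any match. It is a simplified example under stated assumptions: the names `monitor_exchange`, `inspect_text`, and `Finding` are hypothetical, and the pattern lists are deliberately short placeholders rather than a complete detection rule set.

```python
import logging
import re
from dataclasses import dataclass
from typing import List, Pattern

logger = logging.getLogger("llm_monitor")

# Hypothetical patterns for prompts that try to override instructions.
SUSPICIOUS_INPUT: List[Pattern[str]] = [
    re.compile(r"ignore (all |any )?(previous |prior )?instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]

# Hypothetical patterns for responses that appear to leak sensitive values.
SENSITIVE_OUTPUT: List[Pattern[str]] = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number shape
    re.compile(r"AKIA[0-9A-Z]{16}"),       # AWS access key ID shape
]


@dataclass
class Finding:
    direction: str  # "request" or "response"
    pattern: str    # the regular expression that matched


def inspect_text(text: str, patterns: List[Pattern[str]], direction: str) -> List[Finding]:
    """Return one finding per matching pattern and log each of them."""
    findings = [Finding(direction, p.pattern) for p in patterns if p.search(text)]
    for finding in findings:
        logger.warning("%s matched pattern %s", finding.direction, finding.pattern)
    return findings


def monitor_exchange(prompt: str, response: str) -> List[Finding]:
    """Screen the user prompt and the model response of one exchange."""
    return (
        inspect_text(prompt, SUSPICIOUS_INPUT, "request")
        + inspect_text(response, SENSITIVE_OUTPUT, "response")
    )
```

In practice, the findings would feed whatever alerting and incident response process your organization already uses. Pattern matching like this is only one signal and should be layered with other controls.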

Generative AI applications should still uphold the standard security best practices when it comes to protecting data. Establish a secure data perimeter and secure sensitive data stores. Encrypt data and information used for LLM applications at rest and in transit. Protect data used to train your model from training data poisoning by understanding and controlling which users, processes, and roles are allowed to contribute to the data stores and how data flows in the application, by monitoring for bias deviations, and by using versioning and immutable storage in storage services such as Amazon S3. Establish strict data ingress and egress controls using services like AWS Network Firewall and Amazon Virtual Private Cloud (Amazon VPC) to protect against suspicious input and the potential for data exfiltration.
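The encryption and versioning guidance above can be sketched as an Amazon S3 bucket policy plus a versioning setting. The example below only builds the documents and does not call AWS. The helper name `build_bucket_policy` and the bucket name are assumptions for illustration, and whether to require the SSE-KMS header on every upload or rely on bucket default encryption is a design choice for your team.

```python
import json


def build_bucket_policy(bucket_name: str) -> dict:
    """Build a policy that denies non-TLS access and uploads without SSE-KMS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Encryption in transit: reject any request not sent over TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                # Encryption at rest: reject uploads that do not request SSE-KMS.
                # Clients must send the header explicitly for this to pass.
                "Sid": "DenyUploadsWithoutKmsEncryption",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                "Condition": {
                    "StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}
                },
            },
        ],
    }


# Versioning keeps prior object versions, which helps when investigating
# or rolling back suspected training data poisoning.
VERSIONING_CONFIGURATION = {"Status": "Enabled"}

if __name__ == "__main__":
    # "example-training-data" is a placeholder bucket name.
    print(json.dumps(build_bucket_policy("example-training-data"), indent=2))
```

With boto3, documents like these could be applied through the S3 client's `put_bucket_policy` (passing the policy as a JSON string) and `put_bucket_versioning` calls. Test any deny policy in a non-production account first, because an overly broad deny can lock out legitimate access.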

During the training, retraining, or fine-tuning process, you should be aware of any sensitive data that is utilized.
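One simple way to build that awareness is to scan records before they enter a training or fine-tuning dataset and replace anything that looks sensitive with a placeholder. The sketch below is illustrative only: `redact_record` and the two patterns are assumptions, and regular expressions like these catch only obvious cases, so they do not replace a dedicated data discovery and classification process.

```python
import re
from typing import Dict, Pattern, Tuple

# Hypothetical patterns; extend or replace these for your own data types.
PATTERNS: Dict[str, Pattern[str]] = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_record(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace sensitive-looking values with labels and count what was found."""
    counts: Dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        if replaced:
            counts[label] = replaced
    return text, counts


if __name__ == "__main__":
    clean, found = redact_record("Contact jane.doe@example.com, SSN 123-45-6789.")
    print(clean)
    print(found)
```

The per-record counts can be aggregated to show how much sensitive data a dataset contains before deciding whether to redact it, exclude it, or restrict access to it.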