Speech synthesis is the generation of speech from another modality, such as text or lip movements. Text-to-speech (TTS) systems convert written natural language into speech, and most modern TTS applications build on rapid advances in natural language processing.

There are several methods for speech synthesis; two prominent ones are concatenative synthesis and parametric synthesis.

Concatenative synthesis uses pre-recorded speech segments, which are joined together to produce the desired utterance. These segments can be full sentences, words, syllables, diphones, or individual phones. They are usually stored as waveforms or spectrograms, and they are typically segmented and labeled with the help of a speech recognition system. At run time, the system searches the database of recorded segments for the sequence of units that best matches the target utterance; this search is known as unit selection.
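Unit selection is commonly framed as a search that balances two costs: a *target cost* (how well a unit matches what we want to say) and a *join cost* (how audible the seam is between consecutive units). The sketch below finds the cheapest sequence with a Viterbi-style dynamic program. It is a minimal illustration under stated assumptions: the `Unit` fields, the pitch-only cost functions, and the `JOIN_WEIGHT` value are hypothetical; real systems compare many more features (spectral shape, duration, phonetic context).

```python
from dataclasses import dataclass

JOIN_WEIGHT = 2.0  # hypothetical: how strongly to penalize audible joins


@dataclass
class Unit:
    """A recorded segment in the database (fields are illustrative)."""
    unit_id: str
    phone: str    # segment label, e.g. a phone or diphone
    pitch: float  # mean F0 in Hz


def target_cost(unit, target):
    """How far a candidate unit is from the desired (phone, pitch) target."""
    _, target_pitch = target
    return abs(unit.pitch - target_pitch) / 100


def join_cost(prev, unit):
    """Penalty for the pitch jump where two units are concatenated."""
    return JOIN_WEIGHT * abs(prev.pitch - unit.pitch) / 100


def select_units(targets, database):
    """Viterbi search for the unit sequence with the lowest total cost."""
    # Candidates for each target position: units with a matching label.
    candidates = []
    for phone, _ in targets:
        matches = [u for u in database if u.phone == phone]
        if not matches:
            raise ValueError(f"no recorded unit for {phone!r}")
        candidates.append(matches)

    # best[i][j] = (cheapest cost of a path ending at candidates[i][j], backpointer)
    best = [[(target_cost(u, targets[0]), None) for u in candidates[0]]]
    for i in range(1, len(targets)):
        row = []
        for unit in candidates[i]:
            path_cost, back = min(
                (best[i - 1][k][0] + join_cost(prev, unit), k)
                for k, prev in enumerate(candidates[i - 1])
            )
            row.append((path_cost + target_cost(unit, targets[i]), back))
        best.append(row)

    # Trace back from the cheapest final unit.
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(candidates[i][j])
        j = best[i][j][1]
    return path[::-1]


database = [
    Unit("a1", "h", 150.0), Unit("a2", "h", 160.0),
    Unit("b1", "i", 170.0), Unit("b2", "i", 100.0),
]
targets = [("h", 150.0), ("i", 150.0)]
print([u.unit_id for u in select_units(targets, database)])  # ['a2', 'b1']
```

In this toy database, `a1` matches the first target perfectly, yet the search picks `a2` because it joins more smoothly with `b1`. That trade-off between matching the target and avoiding audible seams is the core of unit selection.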

Parametric synthesis also uses recorded human voices, but instead of replaying the recordings, it describes the voice with a set of parameters and a function that turns those parameters into speech. During training, a statistical model estimates the parameters that characterize the audio samples; during synthesis, hidden Markov models (HMMs) generate a sequence of parameters from the target text, and these parameters are then used to synthesize the speech waveform. Speech produced this way does not always reach the quality of natural recordings.
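The two synthesis-time steps can be illustrated with a deliberately tiny sketch: a parameter trajectory is generated from per-state model statistics, and a "vocoder" turns that trajectory into samples. Everything here is a hypothetical stand-in: `PHONE_MODELS` holds made-up state means and durations, only F0 is modeled (real systems also predict spectral parameters), state means are repeated without the dynamic-feature smoothing real HMM systems apply, and the sine oscillator replaces a real vocoder, with silence instead of noise excitation for unvoiced states.

```python
import math

# Hypothetical per-phone models: each is a list of HMM states, given as
# (mean F0 in Hz, duration in frames). F0 = 0 marks an unvoiced state.
PHONE_MODELS = {
    "h": [(0.0, 3), (0.0, 2)],
    "i": [(120.0, 4), (130.0, 4), (110.0, 3)],
}
SAMPLE_RATE = 16000
SAMPLES_PER_FRAME = 80  # 5 ms frame shift at 16 kHz


def generate_parameters(phones):
    """Emit each state's mean F0 for its duration (no dynamic-feature smoothing)."""
    f0_track = []
    for phone in phones:
        for mean_f0, n_frames in PHONE_MODELS[phone]:
            f0_track.extend([mean_f0] * n_frames)
    return f0_track


def synthesize(f0_track):
    """Toy vocoder: a sine oscillator that follows the F0 track, silent when unvoiced."""
    samples = []
    phase = 0.0
    for f0 in f0_track:
        for _ in range(SAMPLES_PER_FRAME):
            if f0 > 0:
                # Accumulate phase so the waveform stays continuous when F0 changes.
                phase += 2 * math.pi * f0 / SAMPLE_RATE
                samples.append(0.5 * math.sin(phase))
            else:
                samples.append(0.0)
    return samples


f0_track = generate_parameters(["h", "i"])
audio = synthesize(f0_track)
print(len(f0_track), len(audio))  # 16 1280
```

Because each state simply outputs its mean, the F0 contour here is a staircase; this step-like, averaged output hints at why parametric speech can sound less natural than the recordings it was trained on.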

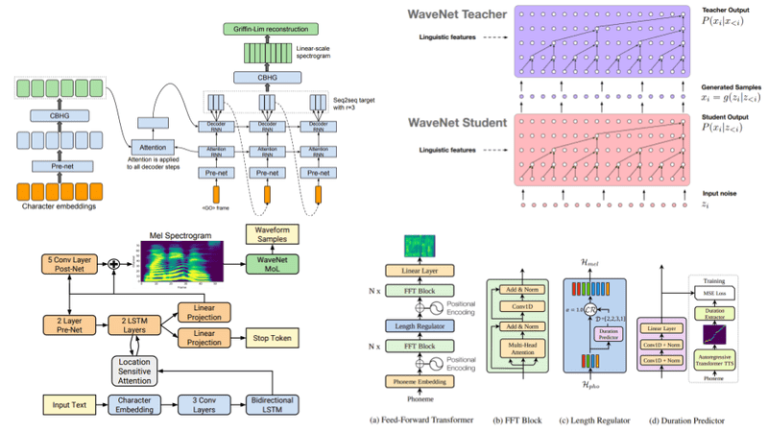

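Other methods include WaveNet (an autoregressive neural model of raw audio waveforms), FastSpeech (a non-autoregressive model that generates mel-spectrograms in parallel), and EATS (End-to-end Adversarial Text-to-Speech), each representing a different approach to TTS with its own trade-offs and strengths.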

These developments show how speech synthesis techniques have evolved and how effective they have become across applications. To explore the field further, consider the models available on PyTorch’s or TensorFlow’s model hubs, or resources such as Mozilla’s TTS: Text-to-Speech for all.