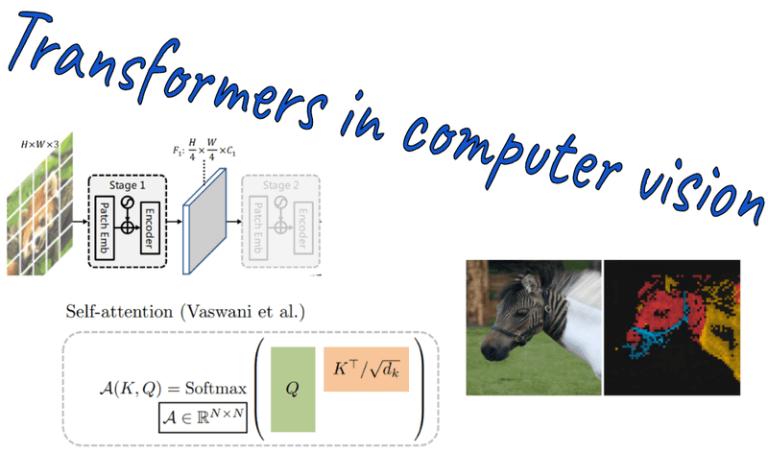

You are probably already aware of the Vision Transformer (ViT). What came after its initial submission is the story of this blog post. We will explore multiple orthogonal research directions on ViTs. Why? Because chances are that you are interested in a particular task, like video summarization. We will address questions such as how you can adapt ViT to your own computer vision problem, what the best ViT-based architectures are, training tricks and recipes, scaling laws, supervised vs. self-supervised pre-training, etc. Even though many of the ideas, like linear and local attention, come from the NLP world, the ViT arena has made a name for itself. Ultimately, it's the same operation in both fields: self-attention. It's just applied to patch embeddings instead of word embeddings.
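To make the last point concrete, here is a minimal NumPy sketch of single-head self-attention applied to patch embeddings. It is an illustration under simplifying assumptions, not the ViT reference implementation: the helper names (`patchify`, `self_attention`), the random weights, and the toy sizes are all made up for this example, and the class token, positional embeddings, multi-head split, and MLP blocks are left out.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (gh, gw, p, p, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

# Toy setup (all values hypothetical): a random 32x32 RGB "image".
rng = np.random.default_rng(0)
image = rng.normal(size=(32, 32, 3))
patch_size, dim = 8, 16

patches = patchify(image, patch_size)  # 16 patches of 8*8*3 = 192 values
w_embed = rng.normal(size=(patches.shape[1], dim)) * 0.02
tokens = patches @ w_embed  # linear patch embedding, plays the role of word embeddings

w_q, w_k, w_v = (rng.normal(size=(dim, dim)) * 0.1 for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
```

Note that `self_attention` never looks at where its tokens came from: swapping `tokens` for a matrix of word embeddings would run through exactly the same code, which is the sense in which the operation is shared between NLP and vision.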

Source: Transformers in Vision

Therefore, here I will cover the directions that I find interesting to pursue. Important note: ViT and its prerequisites are not covered here. To get the most out of this post, I highly suggest taking a good look at the previous posts on self-attention, the original ViT, and certainly Transformers.

If you like our transformer series, consider buying us a coffee!

DeiT: training ViT on a reasonable scale

Knowledge distillation

…

. . .

And that’s all for today! Thank you for your interest in AI. Writing for the open-source/open-access ML/AI community takes me a significant amount of time. If you really learn from our work, you can support us by sharing it or by making a small donation. Stay motivated and positive! N.

Cited as:

Transformers in Computer Vision, 2021. https://theaisummer.com/ https://github.com/The-AI-Summer/transformers-computer-vision
